AI-Driven User Experience Enhanced by Emotional Intelligence

In this article we will walk through how we at Vionlabs are using AI and emotional analysis to automatically create compelling artwork with emotional tags, allowing for highly engaging content discovery and in-depth analysis of what makes a user click a certain poster or preview. Learn more about how AINAR thumbnails are revolutionizing viewer engagement.

Vionlabs’ in-house AI has been developed to solve this challenge, focusing on the user interface of today and the future and on the format needed for the new generation of streamers. We understand that content is about storytelling, and we love storytelling, so we kept that in mind as we trained our AI networks to learn a human way of engaging with stories in a video format.

Vionlabs preview clips are generated using Vionlabs’ unique fingerprint and emotional analysis data. The process generates new assets for each title and tags each asset with its most dominant emotion, for example angry, joyful, or sad. These tags can be used everywhere, from choosing the right clip to represent a title’s mood to personalizing the clips so that each user gets the clip that fits them best, which optimizes engagement.

With emotional tags added, we can generate a diverse set of preview clips that convey different emotions.

The process is as follows:

- Extract frame-by-frame information related to changes in a film’s color space to detect jump cuts

- Utilize the Vionlabs time-series-based information to detect important segments of the content

- Edit the film into clips at the points of these jump cuts and important segment markers

- Assign each clip an emotion tag by combining clips with their corresponding VAD data and identifying the dominant emotion over each one

- Constrain the list of clips to ones of sufficient length to capture attention (between 0:59 and 2:00 minutes)

- Further constrain this to a minimum of 5 minutes past the film’s intro, and (usually) nothing in the last 10% – 30% of the film (to avoid credits and spoilers)

- Generate 8-10 previews per asset, depending on the structure of the story

- Generating clips for an asset takes about 1-2 minutes in total and can scale horizontally
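To make the filtering and tagging steps above more concrete, here is a minimal sketch in Python. It is not Vionlabs’ implementation: the `Clip` class, the function names, and the default parameters are hypothetical, and we assume the VAD data has already been mapped to one emotion label per second of film (the article does not describe that mapping).

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Clip:
    start: float  # seconds from the start of the film
    end: float    # seconds from the start of the film

    @property
    def length(self) -> float:
        return self.end - self.start


def is_eligible(clip, film_duration, intro_end, tail_fraction=0.10,
                min_len=59.0, max_len=120.0):
    """Apply the length and position constraints from the list above.

    tail_fraction is the share of the film excluded at the end
    (the article mentions 10% to 30%); 0.10 is an assumed default.
    """
    long_enough = min_len <= clip.length <= max_len
    after_intro = clip.start >= intro_end + 5 * 60
    before_tail = clip.end <= film_duration * (1.0 - tail_fraction)
    return long_enough and after_intro and before_tail


def dominant_emotion(clip, labels_per_second):
    """Return the most frequent per-second emotion label inside the clip."""
    window = labels_per_second[int(clip.start):int(clip.end)]
    return Counter(window).most_common(1)[0][0]


# Hypothetical 100-minute film whose intro ends after 2 minutes.
labels = ["joyful"] * 650 + ["angry"] * 5350
candidates = [Clip(100, 190), Clip(600, 690), Clip(700, 730), Clip(5500, 5600)]
eligible = [c for c in candidates if is_eligible(c, 6000, 120)]
tagged = [(c, dominant_emotion(c, labels)) for c in eligible]
```

In this example only the clip from 600 to 690 seconds survives: the others start too close to the intro, are too short, or fall into the excluded tail of the film.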

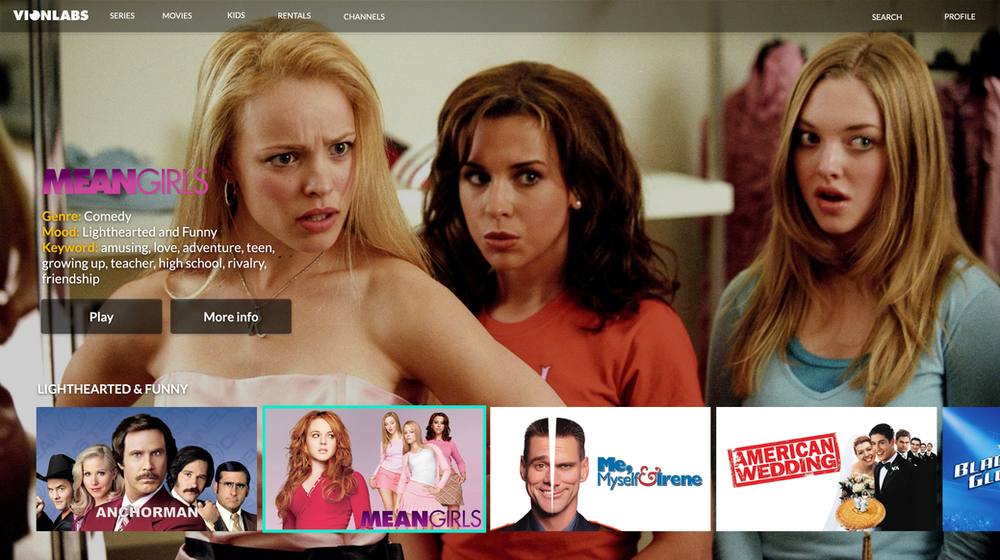

Combining the preview clips with the emotional tags, moods, and video story descriptors, you get a really relevant, crisp, and engaging Visual Discovery experience:

Personalized preview clips are combined with Vionlabs Fingerprint Plus to create the essential presentation for getting viewers engaged, exposing a high-quality personalized clip with predicted mood, genre, and descriptive keywords.


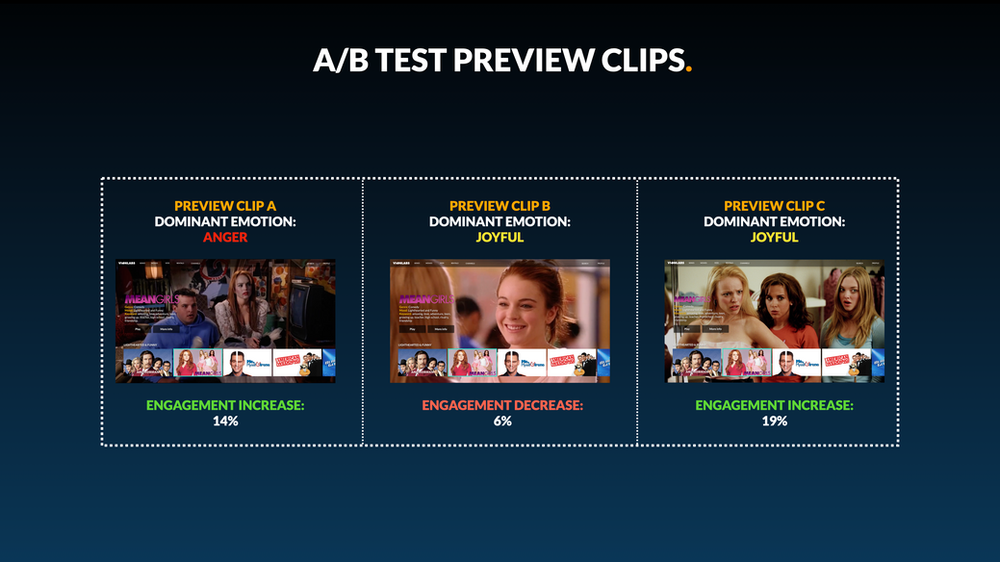

Artificial intelligence and computer vision technology have enabled us to auto-generate previews and thumbnails for titles and add an emotional tag to each clip, so you can determine the mood of the clips and thumbnails. But when you have all that data, which clip or thumbnail should you choose for which title? It is both challenging and time-consuming to decide which asset should represent a title. Therefore, we suggest trying one of these two methods for selecting the clip:

1. A/B test the Preview clips/Thumbnails

See which one has the best impact on your viewers, and from there, decide which thumbnail or clip should represent each title.

Use A/B testing to identify which clip generates the highest click-through rate, and then automatically use this clip to represent the title for a certain period of time. You should repeat this process periodically, as user behavior and preferences change over time.
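As a rough illustration of the selection step, the sketch below picks the variant with the highest click-through rate from logged impressions and clicks. The data layout, function names, and the `min_impressions` threshold are assumptions for the example. A production setup would typically also check statistical significance before declaring a winner.

```python
def click_through_rate(clicks, impressions):
    """Clicks divided by impressions; 0.0 when there is no traffic yet."""
    return clicks / impressions if impressions else 0.0


def pick_winner(stats, min_impressions=1000):
    """Return the variant with the highest CTR among those with enough traffic.

    stats maps a variant name to {"impressions": int, "clicks": int}.
    Returns None when no variant has reached min_impressions.
    """
    eligible = {
        name: s for name, s in stats.items()
        if s["impressions"] >= min_impressions
    }
    if not eligible:
        return None
    return max(
        eligible,
        key=lambda name: click_through_rate(
            eligible[name]["clicks"], eligible[name]["impressions"]
        ),
    )


# Hypothetical results for three clips of the same title.
results = {
    "angry": {"impressions": 2000, "clicks": 90},
    "joyful": {"impressions": 2000, "clicks": 130},
    "sad": {"impressions": 100, "clicks": 20},
}
winner = pick_winner(results)
```

Here the "sad" clip has the highest raw rate but too little traffic to be trusted, so the "joyful" clip is selected.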

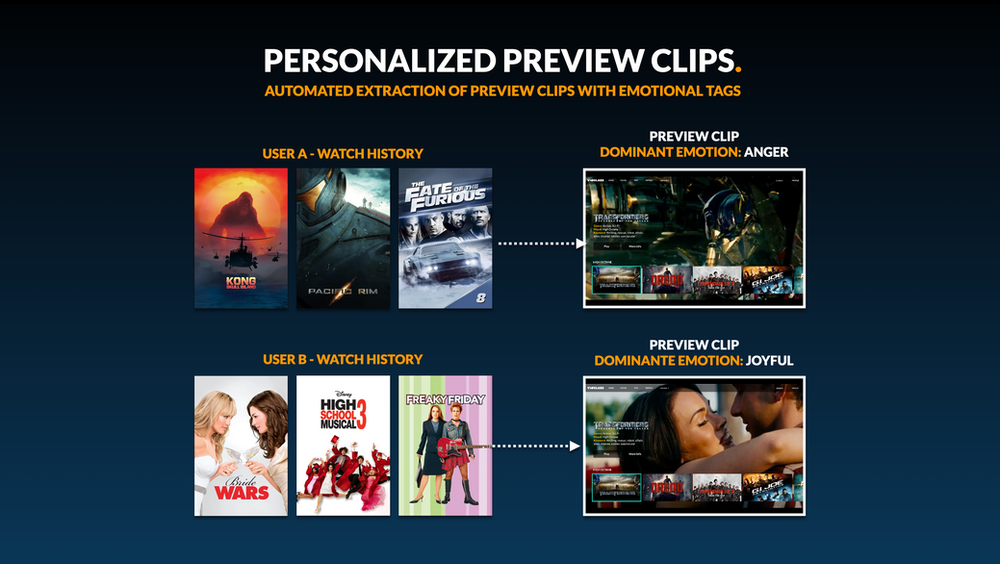

2. Personalize Preview clips/Thumbnails

User A gets the angrier clip and user B gets the more joyful clip.

Alternatively, you can personalize the clips and thumbnails for each user based on their watch history. Choose the clips that have a more colorful and joyful mood for comedy lovers, and go for the darker clips with an angry mood for your action/thriller lovers.
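A simple rule-based version of this idea can be sketched as follows. The genre-to-emotion mapping mirrors the examples in the paragraph above, while the function name, the data shapes, and the fallback behavior are assumptions made for illustration.

```python
from collections import Counter

# Mapping taken from the examples above; extend it as needed.
GENRE_TO_EMOTION = {
    "comedy": "joyful",
    "action": "angry",
    "thriller": "angry",
}


def pick_clip_for_user(watch_history, clips_by_emotion, default_emotion):
    """Choose a clip whose emotion tag matches the user's most-watched genre.

    watch_history is a list of genre names, one per watched title.
    clips_by_emotion maps an emotion tag to a clip identifier and must
    contain default_emotion, which is used as the fallback.
    """
    emotion = default_emotion
    if watch_history:
        top_genre = Counter(watch_history).most_common(1)[0][0]
        emotion = GENRE_TO_EMOTION.get(top_genre, default_emotion)
    return clips_by_emotion.get(emotion, clips_by_emotion[default_emotion])


# Hypothetical clips for one title.
clips = {"joyful": "clip_03", "angry": "clip_07", "sad": "clip_01"}
user_a = pick_clip_for_user(["thriller", "action", "thriller"], clips, "joyful")
user_b = pick_clip_for_user(["comedy", "comedy", "thriller"], clips, "joyful")
```

Users with no watch history, or whose top genre is not in the mapping, fall back to the default clip, which could be the current A/B test winner.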

Conclusion – Streamers expect more

As we mentioned in part 1, users’ expectations of what information should be available when engaging with an asset have changed. Nowadays, there is a greater risk of intimidating users with too much information and scaring them away instead of bringing them in. Relevance, “crispness” and diversity are at the heart of engaging today’s streamers. In just a few seconds, we need to represent the content accurately so that the user can make a well-informed decision with minimum effort, without overwhelming them with irrelevant information.

FAQ: Vionlabs’ AI-Driven User Experience Enhanced by Emotional Intelligence

What is Vionlabs AI technology?

Vionlabs has developed advanced AI technology to enhance user engagement on streaming platforms. Their AI focuses on creating compelling artwork and preview clips with emotional tags to provide highly engaging content discovery experiences.

How does Vionlabs use emotional analysis in its AI?

Vionlabs’ AI uses emotional analysis to automatically generate thumbnails and preview clips tagged with dominant emotions such as angry, joyful, or sad. This helps in creating personalized and engaging content that resonates with viewers on an emotional level.

What are AINAR Thumbnails?

AINAR Thumbnails are AI-generated thumbnails designed to enhance viewer engagement. By selecting the most captivating and intriguing visuals, AINAR Thumbnails aim to capture viewer attention and reduce the likelihood of users skipping over titles they might enjoy.

How do Vionlabs preview clips work?

Vionlabs preview clips are generated using unique fingerprint and emotional analysis data. The process involves extracting frame-by-frame information, detecting important segments, editing the film into clips, and tagging each clip with the dominant emotion. This results in personalized preview clips that optimize viewer engagement.

What is the process for generating preview clips?

- Extract frame-by-frame information related to changes in a film’s color space to detect jump cuts.

- Utilize time-series-based information to detect important segments of the content.

- Edit the film into clips at the points of these jump cuts and important segment markers.

- Assign each clip an emotion tag by combining clips with their corresponding VAD data and identifying the dominant emotion.

- Constrain the list of clips to ones of sufficient length (between 0:59 and 2:00 minutes).

- Further constrain this to a minimum of 5 minutes past the film’s intro, avoiding the last 10% – 30% of the film.

- Generate 8-10 previews per asset, depending on the story structure.
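The first step, detecting cuts from color changes between frames, is often approached by comparing color histograms of consecutive frames. The sketch below uses that common technique as a stand-in, since the exact method is not specified here. The function names and the threshold value are assumptions, and each frame is assumed to be already reduced to a normalized color histogram.

```python
def histogram_distance(a, b):
    """L1 distance between two normalized color histograms."""
    return sum(abs(x - y) for x, y in zip(a, b))


def detect_cuts(histograms, threshold=0.5):
    """Return the indices of frames that start a new shot.

    A cut is flagged when the histogram changes by more than
    threshold from one frame to the next.
    """
    cuts = []
    for i in range(1, len(histograms)):
        if histogram_distance(histograms[i - 1], histograms[i]) > threshold:
            cuts.append(i)
    return cuts


# Four hypothetical frames: two mostly red, then two mostly blue.
frames = [
    [0.80, 0.10, 0.10],
    [0.78, 0.12, 0.10],
    [0.10, 0.10, 0.80],
    [0.12, 0.10, 0.78],
]
cut_indices = detect_cuts(frames)
```

The abrupt change between the second and third frame is reported as a cut, while the small variations within each shot are ignored.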

How long does it take to generate clips for an asset?

Generating clips for an asset takes about 1-2 minutes in total and can scale horizontally, making it a fast and efficient process.

How can I personalize preview clips and thumbnails for different users?

Personalizing preview clips and thumbnails can be done by using Vionlabs Fingerprint Plus to create high-quality personalized clips with predicted mood, genre, and descriptive keywords. You can A/B test different clips to determine which one has the best impact on your viewers or personalize the clips based on individual user preferences and watch history.

What are the benefits of using emotional tags in preview clips?

Emotional tags in preview clips help create a more engaging and relevant visual discovery experience. By accurately representing the mood and emotion of the content, viewers can make well-informed decisions with minimal effort, enhancing their overall streaming experience.

How do I implement A/B testing for preview clips and thumbnails?

To implement A/B testing, select different preview clips or thumbnails for the same title and measure their impact on viewer engagement. Use the clip that generates the highest click-through rate as the representation for a certain period. Repeat this process periodically to adapt to changing user behavior and preferences.

Why is it important to avoid overwhelming users with too much information?

Providing too much information can intimidate and overwhelm users, leading to disengagement. It’s crucial to present content in a way that is relevant, clear, and diverse, allowing users to make quick and informed decisions without being bombarded with unnecessary details.